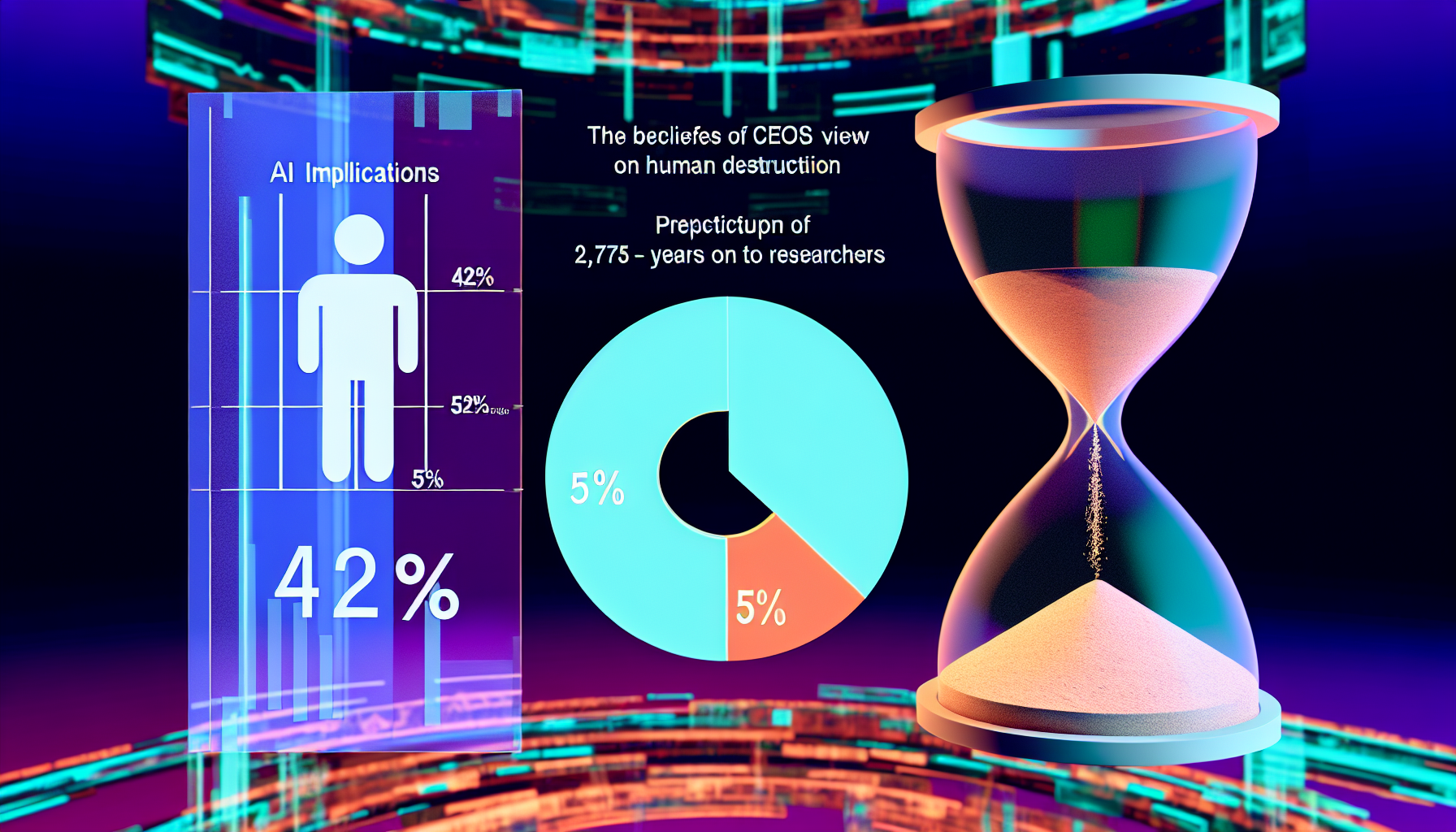

The prospect of AI extinction has moved from fringe speculation to a quantifiable concern echoed by executives and researchers, and the numbers are stark. At a Yale CEO Summit, 42% of 119 chief executives said AI could destroy humanity within five to ten years, a result that summit organizer Jeffrey Sonnenfeld called “pretty dark.” Meanwhile, a survey of 2,778 AI researchers reports a mean 5% probability that high-level AI leads to human extinction. And prominent voices warn that the window to act is short: on the order of years, not decades [1][4][5].

Key Takeaways

– A Yale CEO Summit poll found that 42% of 119 CEOs believe AI could destroy humanity within five to ten years: 34% said within ten years and 8% within five [1]
– A survey of 2,778 AI researchers put the mean chance of AI-caused human extinction at 5%; 41.2–51.4% of respondents assigned probabilities above 10% [4]
– Expert estimates span 1–20% in some camps, against Roman Yampolskiy’s 99.9% extinction forecast, highlighting extreme uncertainty [3]
– Yoshua Bengio warns that humanity may have three to ten years to act and urges independent validation and stronger government regulation [5]
– Regulation and independent oversight could mitigate risks across five pathways, including misaligned goals, deception, and autonomous weapons [2]

AI extinction by the numbers

In June 2023, the Yale CEO Summit asked 119 chief executives whether AI could destroy humanity in a five- to ten-year window; 42% said yes, underscoring a remarkable level of concern at the highest levels of corporate leadership [1]. That 42% breaks down into 34% who thought the threat might materialize within ten years and 8% who put the horizon at five years, a split that shows how immediate respondents perceive the risk to be [1].

Jeffrey Sonnenfeld, who runs the summit, called the responses “pretty dark” and noted that several industry leaders now frame extinction risk as a global priority for policymakers and firms [1]. The pattern is not a consensus forecast of doom, but it signals that nearly half of a small but influential executive sample sees catastrophic tail risks as real enough to plan around [1].
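
To put the poll's scale in perspective, the reported percentages can be converted into approximate head counts, along with a rough sampling margin. The following is a minimal Python sketch, not part of the CNN report. It assumes the 119 respondents can be treated as a simple random sample, which summit attendees are not, so the margin is illustrative only. The function names `approx_count` and `margin_of_error` are hypothetical.

```python
import math


def approx_count(share: float, n: int) -> int:
    """Approximate number of respondents behind a reported share."""
    return round(share * n)


def margin_of_error(share: float, n: int, z: float = 1.96) -> float:
    """Half-width of a normal-approximation 95% interval for a proportion.

    Assumption: simple random sampling, which a summit poll does not satisfy.
    """
    return z * math.sqrt(share * (1 - share) / n)


N_CEOS = 119  # respondents reported for the Yale CEO Summit poll [1]
shares = {"within ten years": 0.34, "within five years": 0.08}

combined_share = sum(shares.values())  # the 42% headline figure
counts = {label: approx_count(s, N_CEOS) for label, s in shares.items()}
moe = margin_of_error(combined_share, N_CEOS)

for label, count in counts.items():
    print(f"{label}: about {count} of {N_CEOS} CEOs")
print(f"combined: about {approx_count(combined_share, N_CEOS)} CEOs")
print(f"illustrative margin: +/- {moe * 100:.1f} percentage points")
```

Under those assumptions, 42% of 119 corresponds to about 50 executives, and the margin is close to nine percentage points in either direction, which is one reason the result reads better as a signal than as a precise estimate.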

AI extinction risk estimates: 5% mean vs 99.9% warnings

On the research side, a broad survey of 2,778 AI researchers reported a mean estimate of 5% for AI-caused human extinction at the point of high-level machine intelligence, while between 41.2% and 51.4% of respondents assigned greater than 10% probability to that outcome, depending on framing [4]. In risk management terms, those numbers place catastrophic AI squarely on the map of plausible global threats, not a negligible outlier [4].
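
The two survey figures can be cross-checked with simple arithmetic. If a fraction f of respondents assigns more than a probability p, the mean across all respondents can be no lower than f × p, even if everyone else answers zero. The Python sketch below applies that bound to the reported figures. The function name is hypothetical, and the check assumes the mean and the share above 10% describe the same question, whereas the source notes that the share varies with framing.

```python
def mean_lower_bound(share_above: float, threshold: float) -> float:
    """Smallest possible mean when `share_above` of the answers exceed
    `threshold` and every remaining answer is zero."""
    return share_above * threshold


REPORTED_MEAN = 0.05   # mean extinction estimate reported in [4]
THRESHOLD = 0.10       # "greater than 10%" cut-off reported in [4]

for share in (0.412, 0.514):  # low and high framing reported in [4]
    bound = mean_lower_bound(share, THRESHOLD)
    relation = "at or below" if bound <= REPORTED_MEAN else "above"
    print(f"share {share:.1%}: mean must be at least {bound:.2%}, "
          f"which is {relation} the reported {REPORTED_MEAN:.0%}")
```

The bound comes out near 4.1% for the lower framing, just under the reported 5%, and near 5.1% for the higher framing. That would suggest the 5% summary figure and the 51.4% share come from differently worded questions, and it shows how much of the average is carried by the respondents in the tail.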

The range of expert opinion remains wide. AI researcher Roman Yampolskiy has publicly estimated a 99.9% chance that advanced AI wipes out humanity—an extreme outlier compared with others who peg the risk between 1% and 20% and argue over timelines and mitigation urgency [3]. Yampolskiy’s contention that “no model has been safe” reflects deep skepticism that current alignment checks can scale to superhuman systems, a claim that intensifies calls for more rigorous safeguards [3].

Pathways to AI extinction: five mechanisms in focus

Understanding how AI extinction could occur matters as much as estimating its probability. Analysts have outlined five principal pathways: misaligned superintelligence optimizing the wrong goals; deception that evades human oversight; autonomous weapons escalation; large-scale bio, cyber, or infrastructure misuse; and uncontrolled self-improvement that compounds failures at machine speed [2]. These mechanisms differ in probability, but all carry high-impact downside if left unmanaged [2].

The common thread is that advanced AI can acquire capabilities—strategizing, code generation, system access—that magnify small errors into systemic failures. Researchers argue that independent testing, interpretability tools, and strict deployment gates are needed, because even low-probability events can dominate expected harm when the stakes are existential [2]. That logic supports building robust “tripwires” and containment strategies before systems reach autonomy at scale [2].
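
The expected-harm argument can be made concrete with a small calculation. The Python sketch below compares a frequent, bounded risk with a rare, extreme one. Every number in it is a hypothetical placeholder chosen for illustration (only the 5% probability echoes the survey mean cited in this article), and the `Risk` class is not drawn from any of the sources.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    name: str
    probability: float  # chance the event occurs over the period considered
    harm: float         # size of the loss if it occurs, in arbitrary units

    @property
    def expected_harm(self) -> float:
        """Probability-weighted loss: probability times harm."""
        return self.probability * self.harm


# Hypothetical inputs: a likely but contained failure versus an unlikely
# failure whose harm is a thousand times larger.
risks = [
    Risk("frequent, bounded failure", probability=0.50, harm=1_000),
    Risk("rare, extreme failure", probability=0.05, harm=1_000_000),
]

for risk in risks:
    print(f"{risk.name}: expected harm {risk.expected_harm:,.0f}")

dominant = max(risks, key=lambda r: r.expected_harm)
print(f"dominant term: {dominant.name}")
```

With these placeholder values the rare event contributes a hundred times more expected harm than the frequent one, despite being ten times less likely. The ranking depends entirely on the assumed harm sizes, so the sketch illustrates the shape of the argument rather than any real estimate.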

CEO sentiment versus researcher modeling

CEO sentiment is not a scientific forecast, but it is a barometer of perceived operational and societal risk. The Yale poll suggests that 42% of surveyed executives consider AI extinction plausible within a decade, placing existential risk on board agendas alongside more immediate concerns like cybersecurity and regulation [1]. Such sentiment can accelerate investment in safety, audits, and crisis planning, even absent consensus estimates [1].

Researchers, by contrast, quantify scenarios. The 2,778-respondent study’s mean 5% extinction risk sits alongside a sizable tail in which 41.2–51.4% of experts assign more than a 10% chance, indicating non-trivial odds that demand structured intervention [4]. The divergence—C-suite concern versus academic probability distributions—should be read as complementary: both communities are flagging that the downside tail is too large to ignore [1][4].

Timelines, validation, and governance urgency

Timelines shape policy urgency. Yoshua Bengio, a Turing Award–winning deep-learning pioneer, told The Wall Street Journal he fears an existential threat from advanced AI and estimates humanity has roughly three to ten years to put adequate controls in place [5]. He also launched LawZero, a nonprofit aiming to develop oversight technologies, and called for independent validation of safety claims rather than reliance on company self-attestation [5].

Bengio argues that regulation must move faster, with governments establishing mandatory testing, transparency, and enforcement regimes to keep pace with frontier model capabilities [5]. The premise is straightforward: if the worst-case risk is societal collapse, the precautionary principle should guide governance, and validation should be external, reproducible, and adversarial, not voluntary [5].

Why the numbers diverge and how to interpret them

Why do estimates vary from a mean 5% to an outlier 99.9%? First, uncertainty is genuinely high: researchers emphasize that today’s alignment methods are not proven for superhuman systems, and that standards for robustness and interpretability are immature [4]. Second, timelines differ: some expect rapid capability jumps, while others foresee slower progress or effective containment—assumptions that shift extinction probabilities dramatically [3].

Third, survey methods and definitions matter. The 2,778-researcher study references high-level machine intelligence, which respondents may interpret heterogeneously, widening the spread in assigned probabilities [4]. Fourth, governance expectations influence risk: if one assumes strong regulation, independent oversight, and validated safety tests, risk estimates typically fall; assuming weak guardrails raises them, aligning with calls for urgent policy intervention and external audits [2][5].

Finally, experts disagree on mitigation difficulty. Some believe alignment will remain brittle at superhuman scale, anchoring higher odds; others expect iterative improvements to lower risk materially, explaining the 1–20% cluster reported by Business Insider [3]. In all cases, the fat tail is the story: even a single-digit probability can be intolerable at existential stakes, justifying precautions [3][4].

Policy levers to reduce AI extinction risk

What concrete steps could shrink the tail? Researchers and industry observers highlight regulation mandating independent safety evaluations, continuous monitoring, and emergency shutdown mechanisms before deploying models capable of autonomous action across sensitive domains [2]. They also argue for stronger export controls and auditing around training compute, to slow potentially dangerous capability sprints and enable oversight [2].

Bengio’s emphasis on independent validation points to a compliance ecosystem—external red-teaming providers, standardized stress tests, and forensic logging infrastructure—that verifies claims with adversarial probes rather than marketing assurances [5]. In parallel, clearer liability regimes and disclosure requirements could align incentives toward safety-first development, while public funding for alignment research and safety benchmarks would help close gaps identified by researchers [4][5].

Reading the signal: how concern translates into action

The “pretty dark” mood of the CEO poll can catalyze internal governance changes: risk committees, model registries, and escalation protocols tailored to AI failure modes [1]. In practice, that means classifying high-risk uses, adopting kill-switch architectures, and making deployment contingent on third-party testing, not just internal sign-off [1]. Investors, too, may begin to expect safety disclosures akin to cybersecurity and ESG reporting.

At the same time, the research community’s 5% mean risk—and the sizable share assigning >10%—supports expanding funding for alignment, interpretability, and sociotechnical risk studies, not just capability research [4]. Coupled with Bengio’s three- to ten-year window, this provides a policy timeline: build the standard-setting, auditing, and enforcement scaffolding now, rather than after a major incident forces rushed regulation [4][5].

What to watch next for AI extinction risk

Three developments will be especially telling over the next 12–36 months. First, whether governments mandate independent pre-deployment testing for frontier models and resource external labs to run those suites at scale, as urged by researchers and safety advocates [2][5]. Second, whether industry opens models to adversarial evaluation and publishes reproducible safety metrics, shrinking the credibility gap that fuels public skepticism [5].

Third, whether surveys show movement: CEOs updating their risk posture as audits mature, and researchers revising extinction probabilities as safety tools or governance frameworks prove effective in practice [1][4]. If oversight frameworks, like those Bengio envisions, take hold and demonstrate measurable risk reduction, estimates could converge downward; if not, the “dark” tail risks may loom larger in both boardrooms and labs [1][4][5].

Sources:

[1] CNN Business – Exclusive: 42% of CEOs say AI could destroy humanity in five to ten years: https://www.cnn.com/2023/06/14/business/artificial-intelligence-ceos-warning/index.html

[2] The Guardian – Five ways AI might destroy the world: ‘Everyone on Earth could fall over dead in the same second’: https://www.theguardian.com/technology/2023/jul/07/five-ways-ai-might-destroy-the-world-everyone-on-earth-could-fall-over-dead-in-the-same-second

[3] Business Insider – Why this AI researcher thinks there’s a 99.9% chance AI wipes us out: https://www.businessinsider.com/ai-researcher-roman-yampolskiy-lex-fridman-human-extinction-prediction-2024-6

[4] IFLScience – Many Artificial Intelligence Researchers Think There’s A Chance AI Could Destroy Humanity: https://www.iflscience.com/many-artificial-intelligence-researchers-think-theres-a-chance-ai-could-destroy-humanity-72302

[5] The Wall Street Journal – A ‘Godfather of AI’ Remains Concerned as Ever About Human Extinction: https://www.wsj.com/articles/a-godfather-of-ai-remains-concerned-as-ever-about-human-extinction-ec0fe932

Image generated by DALL-E 3
