Attraction goes beyond looks. New evidence shows that multimodal attraction, the way voices, scents, and motion combine with facial cues, shapes whom we find appealing, trustworthy, and interesting. Rather than reflecting a single metric of "good looks," the latest studies indicate that attraction emerges from shared standards, personal preferences, and subtle non-verbal signals interacting across the senses. From 2025 experimental data to EEG recordings and motion-capture results, the evidence suggests that faces alone are an incomplete predictor of real-world allure, calling for broader models of mate choice and social selection that integrate multiple channels of information [1][2].

Key Takeaways

– A 2025 study of 61 agents and 71 perceivers found that audio–video stimuli were rated most attractive and body odour least; combining modalities significantly boosted attractiveness judgments.
– A 2024 study using 128-channel EEG found that a pleasant fragrance raised attractiveness, confidence, and femininity ratings relative to odorless air, with early N1/N2 modulations.
– Movement matters: in a study of 37 female walkers and 75 raters, dynamic gait and waist-to-hip ratio were linked to higher attractiveness, while BMI more strongly affected dynamic cues.
– In 2019 experiments, natural biological motion elicited larger pupil dilations and slower attentional disengagement than mechanical motion, signaling heightened attention and arousal.
– A 2017 review proposed that cross-modal integration in the superior temporal sulcus produces super-additive responses, combining vocal, olfactory, and visual signals of health and compatibility.

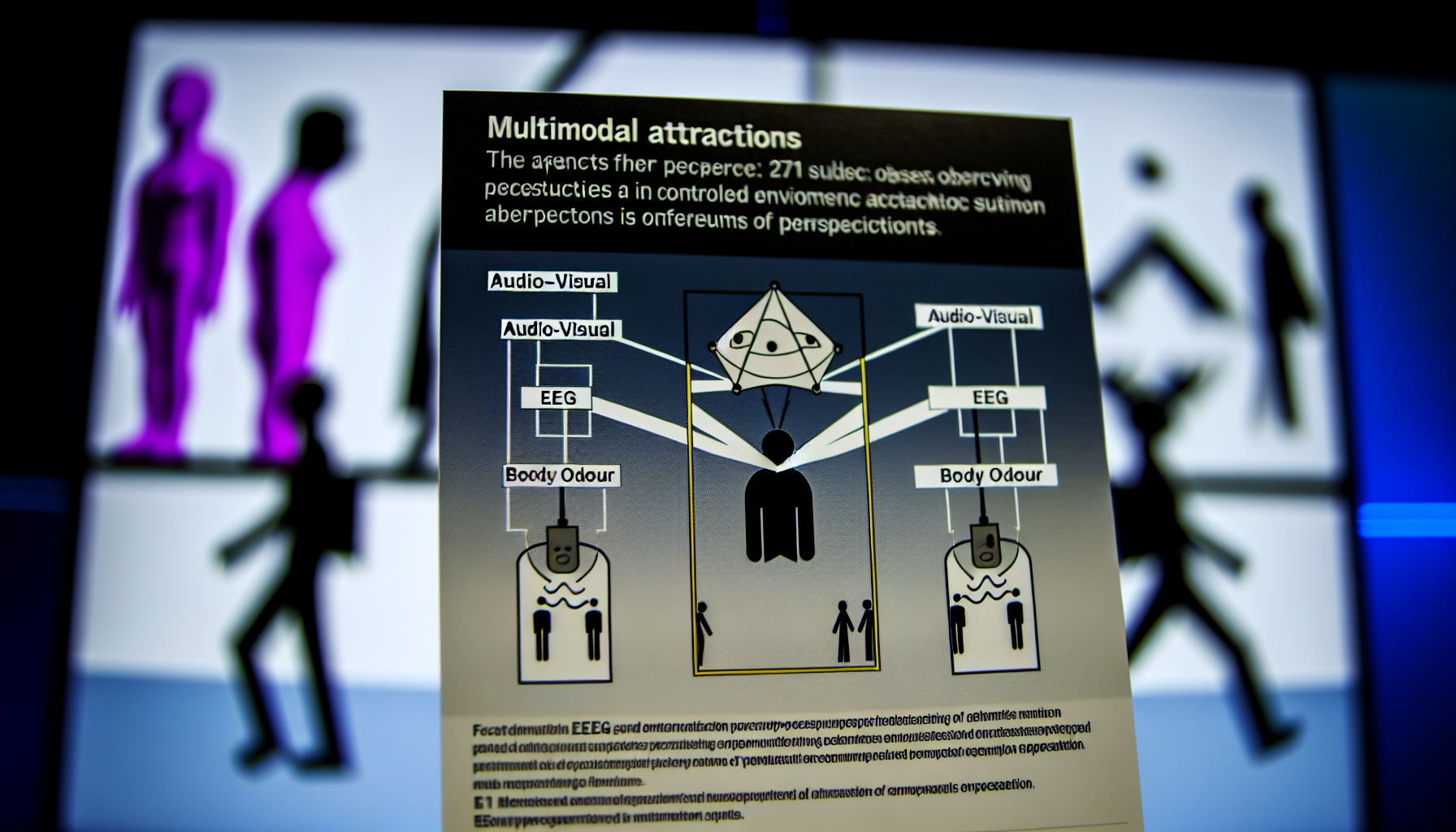

How multimodal attraction changes ratings across senses

A 2025 study reported a clear ranking of modalities: audio–video pairings were rated the most attractive, while isolated body odour received the lowest ratings. The experiment included 61 “agents” (the people being judged) and 71 “perceivers” (the judges), enabling cross-person modeling of both shared standards and idiosyncratic preferences. Crucially, combining cues increased perceived attractiveness beyond single-channel inputs, consistent with “multimodal integration” effects observed across perception research [1].

The same study uncovered correlations between modalities—voice and movement aligned in meaningful ways—suggesting people emit coherent, cross-sensory profiles that perceivers can read. Put differently, a voice that sounds confident or warm may mirror motion that looks fluid or energetic, helping perceivers form faster, more consistent judgments. Researchers also flagged sex differences in cue weighting, meaning men and women may prioritize the mix of voice, movement, scent, and facial information differently when evaluating others [1].

Beyond central tendencies, the 2025 data emphasize personal preference. Two perceivers can disagree sharply about the same individual once voice timbre, movement dynamics, or odour enter the equation. Statistical models that incorporated multimodal inputs better predicted “who likes whom” than face-only analyses. The conclusion is straightforward: attractiveness is not a one-dimensional trait; it is a composite outcome produced by the integration of multiple sensory cues interacting with shared norms and individual taste [1].

Motion and voice: dynamic signals that shift judgments

Static images miss a lot. In a motion-capture study of 37 female walkers rated by 75 observers, dynamic gait cues significantly influenced attractiveness alongside static factors such as body shape. Waist-to-hip ratio (WHR) remained predictive across conditions, but body mass index (BMI) more strongly affected dynamic cues, illustrating how weight distribution and movement interact to alter perceived health, vitality, and appeal when bodies are in motion rather than at rest [4].

These findings dovetail with attention research showing humans are preferentially tuned to natural biological motion. When participants viewed human-like motion versus mechanical motion, they exhibited larger pupil dilations and slower attentional disengagement—physiological signatures of heightened arousal and engagement. The results indicate that natural motion captures visual resources more strongly, making dynamic social signals hard to ignore and potentially biasing attractiveness judgments toward people whose movements look especially coordinated or lively [5].

Voice adds a parallel channel: pitch, timbre, and prosodic modulation carry information about hormonal status, dominance, and even health. Although the 2018 motion study did not manipulate voice, the 2025 multimodal data found voice–movement correlations, implying that vocal and kinematic traits contribute jointly to perceived attractiveness. In practice, a resonant voice paired with fluid, confident movement may lift overall judgments more than either cue alone, consistent with additive or super-additive integration across modalities [1][4][5].

The brain and behavior behind multimodal attraction

A landmark 2017 review argued that attractiveness is fundamentally multimodal, with visual, vocal, and olfactory cues each signaling aspects of mate quality—from hormone profiles to immunogenetic compatibility. The review highlighted the superior temporal sulcus (STS) as a hub for combining cues, producing super-additive neural responses when inputs align (for example, an attractive face paired with a congruent voice), and advocated pushing beyond face-centric paradigms in attractiveness science [2].
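To make "super-additive" concrete: multisensory research commonly compares the response to a combined stimulus with the sum of the responses to each channel presented alone. The sketch below applies that additive criterion to a single set of numbers. The function name, the tolerance parameter, and the response values are hypothetical illustrations, not data or methods from the cited studies.

```python
def classify_integration(multimodal: float, unimodal: list[float], tol: float = 0.0) -> str:
    """Classify a combined response relative to the sum of unimodal responses.

    Additive criterion: super-additive if the combined response exceeds the
    sum of the single-channel responses, sub-additive if it falls below,
    additive otherwise. `tol` is a hypothetical band for measurement noise.
    """
    additive_prediction = sum(unimodal)
    if multimodal > additive_prediction + tol:
        return "super-additive"
    if multimodal < additive_prediction - tol:
        return "sub-additive"
    return "additive"


# Hypothetical response amplitudes (arbitrary units), not data from the cited studies
face_only = 2.0
voice_only = 1.5
face_plus_voice = 4.1

print(classify_integration(face_plus_voice, [face_only, voice_only]))  # super-additive
```

Here the face-plus-voice response (4.1) exceeds the summed single-channel responses (3.5), so it is classified as super-additive. Real studies apply this comparison to baseline-corrected neural signals with statistical tests rather than a single fixed threshold, and bounded rating scales cannot simply be summed this way.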

Neurophysiology backs that view. In 2024, a 128-channel EEG study showed that adding a pleasant fragrance systematically raised attractiveness, confidence, and femininity ratings for both self and other faces relative to odorless air. The odor also modulated early ERP components (N1, N2) and later activity, indicating that scent changes cortical processing within hundreds of milliseconds as the brain evaluates social stimuli. The authors, whose work was linked to industry funding, concluded that fragrance does not just decorate social impressions; it actively reshapes them at perceptual and decisional stages [3].

Bringing the pieces together, the brain appears wired to integrate cross-sensory social information rapidly and nonconsciously. Dynamic movement draws attention and arousal, voice supplies hormonal and dominance cues, and odor carries compatibility and health signals; when these align, perceivers often report stronger, faster, and more consistent judgments. Such integration likely underpins the 2025 finding that audio–video pairings outperform single channels, and helps explain why scent manipulations can tip ratings even when facial information is constant [1][2][3][5].

Real-world implications of multimodal attraction

For dating, first impressions are more than profile photos. Adding short video clips or voice prompts likely improves predictive validity over still images alone, reflecting the 2025 finding that multimodal inputs are rated higher and judged more consistently than single-modality cues. Platforms that optimize brief yet informative voice and motion snippets could produce matches that align better with in-person chemistry, reducing attrition after the first meeting [1].

In consumer and brand contexts, olfactory design and soundscapes can shift perceived warmth, confidence, and appeal. The 2024 EEG study’s fragrance manipulation boosted attractiveness and confidence ratings while altering early cortical processing, suggesting that scent strategies may influence impressions before consumers are consciously aware. Ethical deployment requires transparency, but the data indicate that subtle multisensory nudges can measurably shape social evaluations in retail, hospitality, and workplace settings [3].

Hiring and leadership assessments may also benefit from multimodal evaluation, provided they guard against bias. Voice cadence, movement fluency, and even ambient scent can sway perceived competence and charisma, sometimes independently of actual performance. Structured interviews that standardize exposure (e.g., consistent audio quality, similar movement contexts) and train raters to recognize cross-modal bias could help ensure that judgments track skills rather than incidental sensory factors [1][2][5].

Limits, caveats, and what to test next

The new synthesis is compelling but not definitive. The 2025 experiment’s sample—61 agents and 71 perceivers—supports robust modeling of individual differences yet remains modest, and the odour condition may underrepresent ecological scent cues encountered in daily life. Reported sex differences in cue weighting call for larger, cross-cultural samples to map how preferences vary by context, age, and socioecology, including non-heterosexual populations often under-sampled in attractiveness research [1].

Methodologically, future work should preregister hypotheses, combine lab-grade EEG or fMRI with naturalistic audio–video–odour stimuli, and capture real-world outcomes (dates, friendships, team performance) rather than lab ratings alone. Integrating motion-capture, acoustic analysis, and chemical profiling within the same participants would allow true multimodal models to quantify how cues interact—testing super-additive versus additive effects directly and validating whether neural integration signatures predict enduring attraction outside the lab [2][3][4][5].

Sources:

[1] British Journal of Psychology – Attraction in every sense: How looks, voice, movement and scent draw us to future lovers and friends: https://pmc.ncbi.nlm.nih.gov/articles/PMC12256730/

[2] Frontiers in Psychology – Attractiveness Is Multimodal: Beauty Is Also in the Nose and Ear of the Beholder: https://www.frontiersin.org/articles/10.3389/fpsyg.2017.00778/full

[3] Neuroscience / ScienceDirect – Pleasant and unpleasant odour-face combinations influence face and odour perception: An event-related potential study: https://www.sciencedirect.com/science/article/abs/pii/S0166432824000883

[4] Visual Cognition – Something in the way she moves: biological motion, body shape, and attractiveness in women: https://www.tandfonline.com/doi/full/10.1080/13506285.2018.1471560

[5] Cognition and Emotion / PubMed – Human body motion captures visual attention and elicits pupillary dilation: https://pubmed.ncbi.nlm.nih.gov/31352014/

Image generated by DALL-E 3
