A new open-source speech-to-speech translation pipeline pairs voice-preserving conversion (RVC) with high-fidelity lip-syncing (Wav2Lip) to create dubbed videos that look and sound native. Built from publicly available components and reproducible scripts, the system emphasizes measurable performance: sub-200 ms voice-conversion latency, minimal training data, and pragmatic pre-processing that keeps synchronization clean. By combining mature research with 2023–2025 developer tooling, the pipeline shows speech-to-speech dubbing is now practical for individual creators and small teams.

Key Takeaways

– Shows near-real-time viability: RVC voice conversion reports ~170 ms end-to-end latency, dropping to ~90 ms with ASIO drivers for low-latency audio workflows.
– Reveals minimal data demands: convincing target voices can be trained with roughly 10 minutes of audio, cutting collection costs and accelerating experiments significantly.
– Demonstrates credible lip-sync: Wav2Lip, published in 2020, achieved “almost as good as real” accuracy on LRS2 with released code, checkpoints, and demos.
– Indicates robust defaults: 480p video resizing and 16 kHz audio extraction stabilize throughput, reducing artifacts and easing cross-device reproducibility in dubbing.
– Suggests accessible adoption: Windows, macOS, and Ubuntu support, MIT licensing for RVC, and Colab resources enable rapid onboarding for 2023–2025 implementers.

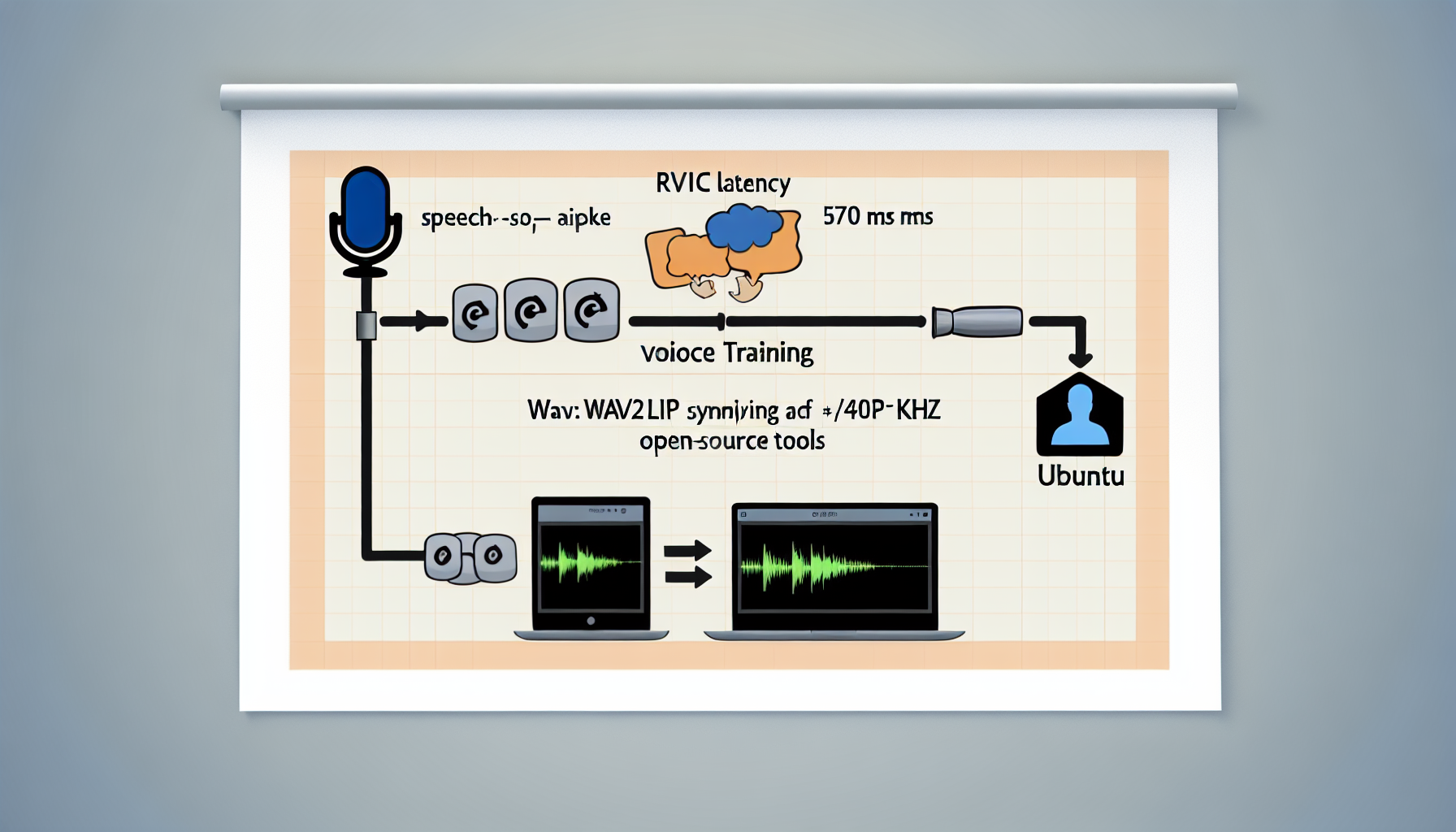

Inside the speech-to-speech pipeline

The pipeline follows a clear sequence. First, transcribe the source speech with an ASR model, then translate the transcript into the target language. Next, synthesize the translated text to audio, and finally apply voice conversion to match the original speaker’s timbre before lip-syncing the output video.

Chaining the stages this way keeps each one under separate control. The ASR step sets transcript quality; the translation stage handles terminology and tone; the TTS step governs clarity and prosody; RVC carries the original speaker’s voice identity over to the synthesized audio; Wav2Lip aligns the mouth with the generated audio. Each component is replaceable, letting teams iterate without breaking the full system.
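The stage chain described above can be sketched as a small container of swappable callables. This is a minimal illustration, not the project's actual code: the class name `DubbingPipeline`, its field names, and the stub lambdas are all hypothetical, and real ASR, translation, TTS, RVC, and Wav2Lip calls would replace the stubs.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class DubbingPipeline:
    """Chain of replaceable stages; every field is a plain callable (hypothetical design)."""
    transcribe: Callable[[str], str]      # source audio path -> source-language text
    translate: Callable[[str], str]       # source text -> target-language text
    synthesize: Callable[[str], str]      # target text -> path to TTS audio
    convert_voice: Callable[[str], str]   # TTS audio path -> path to voice-converted audio
    lip_sync: Callable[[str, str], str]   # (video path, audio path) -> dubbed video path

    def run(self, video_path: str, audio_path: str) -> str:
        """Run the stages in the order the article describes."""
        text = self.transcribe(audio_path)
        translated = self.translate(text)
        tts_audio = self.synthesize(translated)
        converted = self.convert_voice(tts_audio)
        return self.lip_sync(video_path, converted)


# Stub stages stand in for the real models so the sketch runs anywhere.
pipeline = DubbingPipeline(
    transcribe=lambda audio: "hello world",
    translate=lambda text: text.upper(),
    synthesize=lambda text: "tts.wav",
    convert_voice=lambda audio: audio.replace("tts", "rvc"),
    lip_sync=lambda video, audio: f"{video}+{audio}",
)
result = pipeline.run("talk.mp4", "talk.wav")
print(result)
```

Because each stage is just a function, swapping the TTS engine or the translation model means replacing one field and leaving the rest of the chain untouched.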

Reproducibility improves with pragmatic defaults. A recent how-to recommends resizing source video to 480p by default and extracting audio at 16 kHz, alongside runnable scripts and Wav2Lip batch tuning guidance for stable inference at scale [5].
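The two pre-processing defaults can be expressed as FFmpeg invocations. The sketch below only builds the argument lists; the helper names and file names are placeholders, and downmixing to mono (`-ac 1`) is an assumption that the article does not state.

```python
import shlex
from typing import List


def extract_audio_cmd(video_in: str, wav_out: str, sample_rate: int = 16000) -> List[str]:
    """Build an FFmpeg command that drops the video stream and writes WAV audio."""
    return [
        "ffmpeg", "-y",
        "-i", video_in,
        "-vn",                      # no video in the output
        "-ac", "1",                 # mono (assumption, not stated in the article)
        "-ar", str(sample_rate),    # resample, 16 kHz by default
        wav_out,
    ]


def resize_video_cmd(video_in: str, video_out: str, height: int = 480) -> List[str]:
    """Build an FFmpeg command that scales to the given height, keeping aspect ratio."""
    return [
        "ffmpeg", "-y",
        "-i", video_in,
        "-vf", f"scale=-2:{height}",  # -2 lets FFmpeg choose a matching even width
        "-c:a", "copy",               # leave the audio stream untouched
        video_out,
    ]


audio_cmd = extract_audio_cmd("source.mp4", "source_16k.wav")
video_cmd = resize_video_cmd("source.mp4", "source_480p.mp4")
print(shlex.join(audio_cmd))
print(shlex.join(video_cmd))
```

To execute either command, pass the list to `subprocess.run(cmd, check=True)` on a machine with FFmpeg installed.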

Why lip sync quality anchors credibility

Convincing dubbing rests on mouth movements that match phonemes. Wav2Lip addresses this by learning a dedicated lip-sync discriminator and operating robustly “in the wild.” The original research, released on August 23, 2020 and presented at ACM Multimedia on October 12, 2020, reported lip-sync accuracy “almost as good as real” on LRS2 and shipped code, checkpoints, and benchmarks to validate results [1].

That rigor matters. In practical use, a strong lip-sync model reduces uncanny artifacts that distract viewers, particularly at lower resolutions common in social and enterprise pipelines. The discriminator-guided training adds resilience across lighting, head poses, and varied speaking styles.

Voice preservation with RVC and low-latency conversion

Voice identity is the second pillar. Retrieval-based Voice Conversion (RVC) can train a target voice with as little as 10 minutes of audio, dramatically lowering the threshold for custom dubbing. The project’s WebUI is MIT-licensed and supports NVIDIA, AMD, and Intel acceleration, reporting end-to-end real-time latency around 170 ms—and near 90 ms when using ASIO for audio I/O [3].

Those figures enable near-live sessions, interactive reviews, and rapid iteration on dubbing reels without specialized hardware. Model fusion and flexible backends further help creators tune timbre or add warmth, brightness, or age characteristics while preserving intelligibility.

Because voice conversion layers on top of TTS, teams can swap in multilingual synthesizers and still keep the original on-screen speaker’s vocal fingerprint. That decoupling is a practical advantage over end-to-end black boxes, especially when you need to control terminology, pronunciation, or emotions.

Deployment options for speech-to-speech dubbing

Wav2Lip’s official GitHub repository ships training and inference code, pretrained checkpoints, and Colab notebooks, along with practical usage notes such as padding (“pads”) tuning and example commands. A key compliance detail: models trained on the LRS2 dataset are marked for non-commercial use, with an HD commercial option available via Sync Labs for production scenarios [2].

In practice, that means teams should audit which checkpoints they load and confirm the license path that matches their use case. The repo’s examples remain valuable for prototyping, benchmarking, and learning effective parameter choices before production hardening.

Installation, OS support, and community know-how

For RVC, community guides streamline setup and everyday workflows. A widely used explainer walks through installation, FFmpeg integration, Colab notebooks, and best practices. It highlights support for Windows, macOS, and Ubuntu, and connects developers to an active community and model hubs to accelerate experimentation in 2023–2024 and beyond [4].

These resources reduce friction on heterogeneous teams. Cloud-first and desktop-first contributors can share notebooks, checkpoints, and logs, keeping experiments harmonized across operating systems and drivers.

Quantifying speech-to-speech performance

Performance in speech-to-speech dubbing depends on the slowest stage. The RVC conversion stage is competitive, with reported latency around 170 ms—and as low as ~90 ms under ASIO—leaving headroom for ASR, translation, and TTS in near-real-time contexts.
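A quick budget calculation makes the headroom concrete. The RVC figures come from the text above; the 1000 ms end-to-end target is a hypothetical value chosen only for illustration, not a number from the RVC project.

```python
# Reported RVC conversion latencies from the text, in milliseconds.
RVC_LATENCY_MS = {"default": 170, "asio": 90}


def remaining_budget_ms(target_ms: int, audio_io: str = "default") -> int:
    """Latency left for ASR, translation, and TTS after RVC takes its share."""
    return target_ms - RVC_LATENCY_MS[audio_io]


TARGET_MS = 1000  # hypothetical end-to-end target, for illustration only

for mode in RVC_LATENCY_MS:
    left = remaining_budget_ms(TARGET_MS, mode)
    print(f"{mode}: {left} ms left for the other stages")
```

Under that assumed target, switching to ASIO frees an extra 80 ms for the upstream stages.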

Pre-processing choices also affect throughput. Resizing to 480p reduces the pixel load for face detection and mouth-region processing, often stabilizing lip-sync inference without a visible quality penalty in social and enterprise workflows that target 480p–720p deliverables. Similarly, extracting audio at 16 kHz standardizes sample rates and reduces compute during both voice conversion and sync.

Batch sizing and padding matter. Wav2Lip exposes parameters for padding around the detected face region (the “pads” setting) and for batch size; conservative values reduce alignment errors on fast head movements, while larger batches improve GPU utilization on static shots. The balance depends on content type: talking-head material tolerates more batching than footage with frequent cuts.
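As a rough sketch of how these knobs are passed, the helper below assembles a command line for the `inference.py` script in the Wav2Lip repository. The flags `--pads` (padding around the detected face box, given as top, bottom, left, right), `--wav2lip_batch_size`, `--face_det_batch_size`, and `--nosmooth` exist in that script; the helper name, the file paths, and the specific values used here are placeholders to adjust per project, and the defaults should be checked against the current README.

```python
from typing import List, Sequence


def wav2lip_cmd(
    checkpoint: str,
    face: str,
    audio: str,
    outfile: str,
    pads: Sequence[int] = (0, 10, 0, 0),   # top, bottom, left, right padding in pixels
    wav2lip_batch_size: int = 128,
    face_det_batch_size: int = 16,
    nosmooth: bool = False,
) -> List[str]:
    """Build the argument list for Wav2Lip's inference.py (run from the repo root)."""
    if len(pads) != 4:
        raise ValueError("pads needs four values: top, bottom, left, right")
    cmd = [
        "python", "inference.py",
        "--checkpoint_path", checkpoint,
        "--face", face,
        "--audio", audio,
        "--outfile", outfile,
        "--pads", *[str(p) for p in pads],
        "--face_det_batch_size", str(face_det_batch_size),
        "--wav2lip_batch_size", str(wav2lip_batch_size),
    ]
    if nosmooth:
        cmd.append("--nosmooth")  # disable smoothing of face detections across frames
    return cmd


# Placeholder paths; a smaller batch size as a conservative setting for busy footage.
cmd = wav2lip_cmd(
    "checkpoints/wav2lip_gan.pth", "source_480p.mp4", "dubbed_16k.wav",
    "results/dubbed.mp4", pads=(0, 20, 0, 0), wav2lip_batch_size=32,
)
print(" ".join(cmd))
```

Keeping the command in a function makes it easy to log the exact parameters used for each render alongside the output file.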

For voice identity, training with approximately 10 minutes of clean speech is a pragmatic baseline. More minutes help in edge cases—noisy rooms, extreme prosody, or unusual timbres—but the headline finding is that short datasets already yield convincing conversions. That changes project scoping: collecting a high-quality 10-minute sample becomes a one-afternoon task, not a multi-day exercise.
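Before training, it can help to confirm that a collected voice set actually reaches the roughly 10-minute baseline. The sketch below sums the duration of uncompressed WAV files in a folder using only the standard library; the function names and the folder layout are assumptions, and compressed formats would need a different reader.

```python
import wave
from pathlib import Path


def wav_seconds(path: Path) -> float:
    """Duration of one PCM WAV file in seconds."""
    with wave.open(str(path), "rb") as w:
        return w.getnframes() / w.getframerate()


def dataset_minutes(folder: str) -> float:
    """Total duration, in minutes, of all .wav files directly inside a folder."""
    return sum(wav_seconds(p) for p in sorted(Path(folder).glob("*.wav"))) / 60


def meets_target(folder: str, target_minutes: float = 10.0) -> bool:
    """True when the folder holds at least the target amount of audio."""
    return dataset_minutes(folder) >= target_minutes
```

Calling `meets_target("voice_set")` on a hypothetical `voice_set` directory returns `False` until enough clips have been recorded, which gives a simple gate before a training run is launched.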

Governance, rights, and responsible use

Two governance points deserve emphasis. First, checkpoint licensing: Wav2Lip checkpoints tied to LRS2 have non-commercial terms, so teams must select compliant models or license an approved alternative before deploying monetized services. Second, voice rights: even when the tooling is MIT-licensed, consent and usage scope for the target voice should be explicit and documented.

Operationally, keep a manifest that logs source audio duration (for example, 10 minutes), collection dates, consent artifacts, model versions, and pipeline parameters (480p, 16 kHz). That record supports auditability, reproducibility, and user trust.
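One possible shape for such a manifest is a small dataclass serialized to JSON. The field names and every example value below are illustrative assumptions, not a schema defined by RVC or Wav2Lip.

```python
import json
from dataclasses import asdict, dataclass
from typing import Dict


@dataclass
class DubbingManifest:
    """Audit record for one dubbing job (hypothetical schema)."""
    speaker_id: str
    source_audio_minutes: float
    collection_date: str             # ISO 8601 date string
    consent_document: str            # path or ID of the signed consent artifact
    model_versions: Dict[str, str]   # component name -> version or checkpoint tag
    video_height: int = 480
    audio_sample_rate_hz: int = 16000

    def to_json(self) -> str:
        """Serialize with stable key order so manifests diff cleanly."""
        return json.dumps(asdict(self), indent=2, sort_keys=True)


# All values here are placeholders for illustration.
manifest = DubbingManifest(
    speaker_id="speaker-001",
    source_audio_minutes=10.0,
    collection_date="2025-01-15",
    consent_document="consent/speaker-001.pdf",
    model_versions={"rvc": "example-tag", "wav2lip": "example-checkpoint"},
)
print(manifest.to_json())
```

Writing the JSON next to each rendered video keeps the consent reference and the pipeline parameters attached to the output they describe.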

Practical build notes for speech-to-speech teams

A clean, end-to-end build typically follows this recipe: extract audio at 16 kHz from the source video; transcribe and translate; synthesize target-language audio; convert the synthesized audio to the original speaker’s timbre with an RVC model trained from a ~10-minute voice set; resize video to 480p; run Wav2Lip to align the mouth region; mux the converted audio back into the video.
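The final mux step in the recipe above can be sketched as one more FFmpeg call that takes the video stream from the lip-synced file and the audio stream from the converted track. The helper name and file names are placeholders, and encoding the audio as AAC is an assumption suited to MP4 output.

```python
from typing import List


def mux_cmd(video_in: str, audio_in: str, video_out: str) -> List[str]:
    """Build an FFmpeg command that pairs one video stream with one audio stream."""
    return [
        "ffmpeg", "-y",
        "-i", video_in,      # input 0: lip-synced video
        "-i", audio_in,      # input 1: voice-converted audio
        "-map", "0:v:0",     # take the first video stream from input 0
        "-map", "1:a:0",     # take the first audio stream from input 1
        "-c:v", "copy",      # do not re-encode the video
        "-c:a", "aac",       # audio codec (assumption for MP4 containers)
        "-shortest",         # stop at the end of the shorter stream
        video_out,
    ]


final_cmd = mux_cmd("lipsynced_480p.mp4", "dubbed_rvc.wav", "final_dub.mp4")
print(" ".join(final_cmd))
```

Copying the video stream avoids a second lossy encode after the lip-sync pass; re-encode only if the distribution target requires a different bitrate or codec.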

Monitor three checkpoints along the way. After translation, spot-check names and acronyms; after TTS, adjust pacing and emphasis; after voice conversion, confirm timbre and clarity on difficult phonemes. Finish with a lip-sync pass and a final mux, targeting your distribution’s standard bitrate and container.

What’s next for builders

The path forward is iterative hardening: curate 10–20 minute voice sets for high-visibility speakers; standardize 480p/16 kHz pre-processing to keep results consistent; maintain a low-latency RVC configuration for reviews and a quality-first configuration for final renders; and align licensing and consent workflows. With these guardrails, speech-to-speech dubbing moves from research demo to dependable production capability across marketing, learning, and product support.

Sources:

[1] arXiv / ACM Multimedia (MM ’20) – A Lip Sync Expert Is All You Need for Speech to Lip Generation In The Wild: https://arxiv.org/abs/2008.10010

[2] GitHub (Rudrabha/Wav2Lip) – Wav2Lip (official repository), training and inference code with checkpoints: https://github.com/Rudrabha/Wav2Lip

[3] GitHub (RVC-Project) – Retrieval-based Voice Conversion WebUI: https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI

[4] Hugging Face (blog / docs) – What is Retrieval-based Voice Conversion WebUI?: https://huggingface.co/blog/Blane187/what-is-rvc

[5] Medium (tutorial) – Speech-to-Speech Translation with Lip Sync: https://medium.com/@srikarvardhan2005/speech-to-speech-translation-with-lip-sync-425d8bb74530

Image generated by DALL-E 3
